HackEye Segmentation

HackEye Segmentation was a project to segment organs within human MRI scans. We were specifically interested in segmenting three tissues: the left eye (LE), the right eye (RE), and the brain. To do this, we trained a convolutional neural network on a dataset of 987 MRI scans.

The Team

Team Members: Olivia Sandvold and Chase Johnson

The Data

We were provided a dataset of 981 subjects. Each subject had a T1-weighted and a T2-weighted image, a segmentation mask of the brain, and an fcsv file containing fiducial points that were generated by a pre-existing algorithm.

# Example files for a single subject:

0123_45678.fcsv # fiducial csv file

0123_45678_t1w.nii.gz # t1 weighted image

0123_45678_t2w.nii.gz # t2 weighted image

0123_45678_seg.nii.gz # provided segmentation mask of the brain

It is important to know that the fiducial points represent the physical-space location of each fiducial in a Right Anterior Superior (RAS) coordinate frame, whereas our t1, t2, and segmentation images were in Left Posterior Superior (LPS) coordinates.
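Converting a point from RAS to LPS amounts to negating its first two coordinates, since the superior axis is shared by both frames. Here is a minimal sketch with pandas, assuming each fiducial row has exactly the form `label,x,y,z` and that any header lines start with `#`. The real fcsv layout may carry extra columns, and the helper names `read_fiducials` and `ras_to_lps` are hypothetical, not taken from our code.

```python
import io

import pandas as pd

# Two fiducial rows in RAS coordinates, in the label,x,y,z form assumed here.
SAMPLE_ROWS = """\
LE,-32.9635972009305,57.9471033061737,-21.105974149604894
RE,30.775787784712914,59.98414825112046,-21.68273735422315
"""


def read_fiducials(source):
    """Read label,x,y,z rows into a DataFrame indexed by label.

    Lines starting with '#' are skipped as comments (an assumption about
    the fcsv header).
    """
    table = pd.read_csv(
        source,
        comment="#",
        header=None,
        names=["label", "x", "y", "z"],
    )
    return table.set_index("label")


def ras_to_lps(points):
    """Negate x (R -> L) and y (A -> P); z (S) is the same in both frames."""
    lps = points.copy()
    lps[["x", "y"]] = -lps[["x", "y"]]
    return lps


fiducials_ras = read_fiducials(io.StringIO(SAMPLE_ROWS))
fiducials_lps = ras_to_lps(fiducials_ras)
```

After the conversion, `fiducials_lps.loc["LE"]` holds the left-eye point in the same frame as the images, so it can be mapped to a voxel index.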

# the fiducials we used to create masks for the left eye and right eye

LE,-32.9635972009305,57.9471033061737,-21.105974149604894

RE,30.775787784712914,59.98414825112046,-21.68273735422315

Preprocessing

We used an image processing library called SimpleITK to manipulate our images and pandas to manipulate the fcsv files. We used the RE and LE fiducial points from each subject's fcsv file to generate labels for the left and right eye via radial expansion from the fiducial combined with intensity thresholding. We then added the eye and brain masks together to get our final label mask. The t1 and t2 images had already been intensity normalized and bias field corrected, so no further cleaning was done.
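The label-generation step can be sketched roughly as follows. This is a plain NumPy stand-in on a synthetic volume, not our SimpleITK code: the radius, the intensity bounds, the label values (1 = brain, 2 = LE, 3 = RE), and all function names are assumptions chosen for illustration. It also assumes an identity direction matrix and an array indexed in x, y, z order. With SimpleITK, the voxel index would normally come from `TransformPhysicalPointToIndex`, and arrays from `GetArrayFromImage` are ordered z, y, x.

```python
import numpy as np


def physical_to_index(point_lps, origin, spacing):
    """Map a physical LPS point to the nearest voxel index.

    Assumes an identity direction matrix: index = (point - origin) / spacing.
    """
    point = np.asarray(point_lps, dtype=float)
    idx = np.rint((point - np.asarray(origin)) / np.asarray(spacing))
    return tuple(int(i) for i in idx)


def eye_mask(image, center_index, spacing, radius_mm, lower, upper):
    """Keep voxels within radius_mm of the fiducial and inside [lower, upper]."""
    grids = np.indices(image.shape)
    dist_sq = sum(
        ((g - c) * s) ** 2 for g, c, s in zip(grids, center_index, spacing)
    )
    in_sphere = dist_sq <= radius_mm ** 2
    in_range = (image >= lower) & (image <= upper)
    return in_sphere & in_range


def combine_labels(brain, left_eye, right_eye):
    """Merge binary masks into one label map (hypothetical label values)."""
    labels = np.zeros(brain.shape, dtype=np.uint8)
    labels[brain] = 1
    labels[left_eye] = 2
    labels[right_eye] = 3
    return labels


# Synthetic 40x40x40 volume with 1 mm voxels; bright cubes stand in for eyes.
spacing = (1.0, 1.0, 1.0)
origin = (0.0, 0.0, 0.0)
volume = np.zeros((40, 40, 40))
volume[8:13, 18:23, 18:23] = 100.0   # stand-in for the left eye
volume[28:33, 18:23, 18:23] = 100.0  # stand-in for the right eye
brain = np.zeros(volume.shape, dtype=bool)
brain[15:25, 5:15, 15:25] = True     # stand-in for the provided brain mask

le_idx = physical_to_index((10.2, 20.0, 19.8), origin, spacing)
re_idx = physical_to_index((30.0, 20.0, 20.0), origin, spacing)
le = eye_mask(volume, le_idx, spacing, radius_mm=6.0, lower=50.0, upper=150.0)
re = eye_mask(volume, re_idx, spacing, radius_mm=6.0, lower=50.0, upper=150.0)
labels = combine_labels(brain, le, re)
```

The sphere limits how far the mask can grow from the fiducial, while the intensity window keeps only voxels that look like eye tissue inside that sphere.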

Training

We used NiftyNet, a deep learning framework designed specifically for medical imaging and built on TensorFlow, to train our model, evaluate its performance, and run inference on new data. We trained our model for 30000 iterations overnight, which took approximately 8 hours.

Result

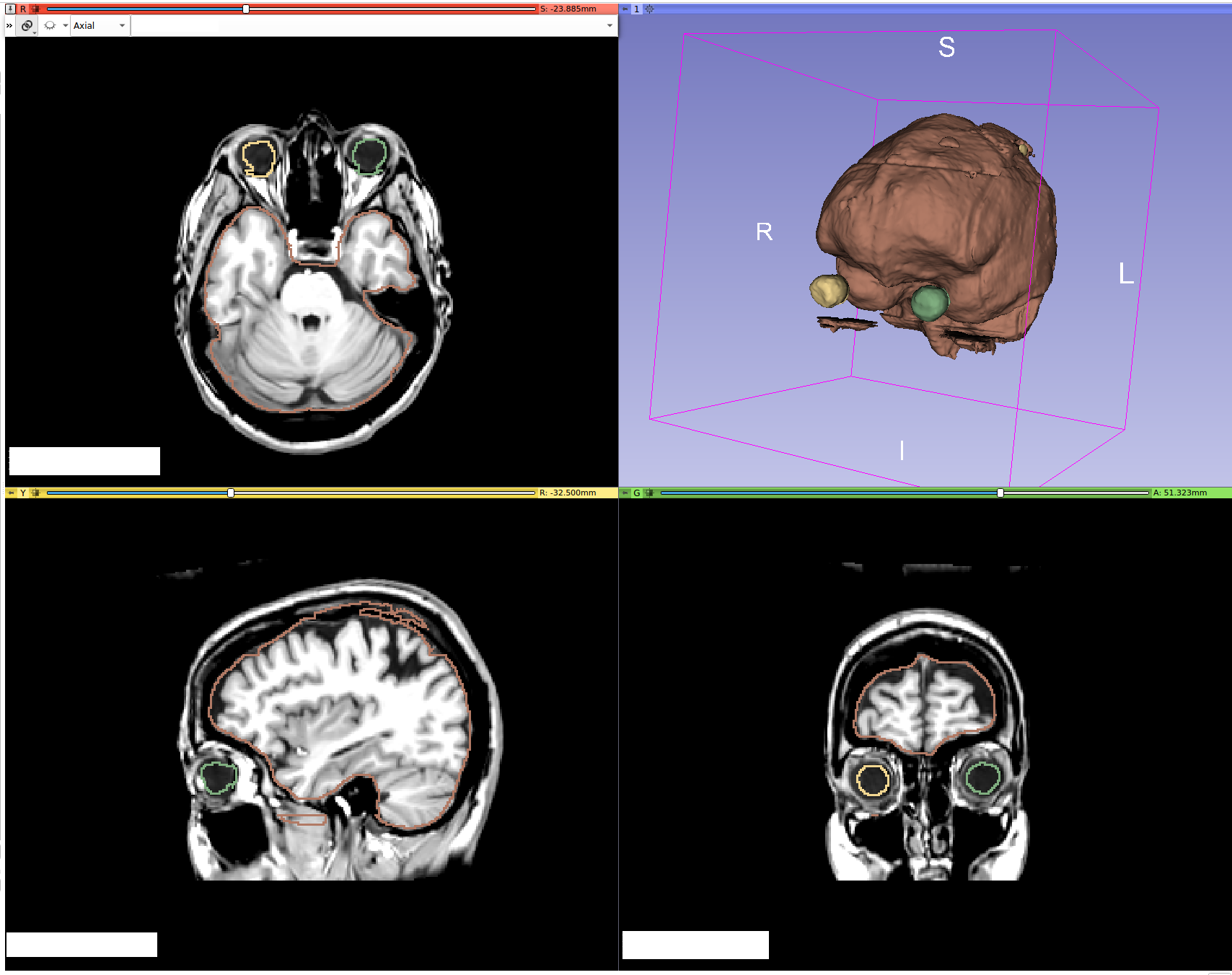

We used holdout cross validation to validate our results. Below is a picture of the results we where able to achieve. Below is the segmentation result on holdout data.
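A holdout split of this kind can be sketched as below. The 10% holdout fraction, the fixed seed, and the subject identifiers are assumptions for illustration only; they are not the values we used. Splitting by subject keeps a subject's t1 and t2 images on the same side of the split.

```python
import random


def holdout_split(subject_ids, holdout_fraction=0.1, seed=0):
    """Shuffle subjects reproducibly and set a fraction aside for validation."""
    ids = sorted(subject_ids)
    random.Random(seed).shuffle(ids)
    n_holdout = max(1, round(len(ids) * holdout_fraction))
    return ids[n_holdout:], ids[:n_holdout]


# Hypothetical subject identifiers.
subjects = [f"subject_{i:03d}" for i in range(100)]
train_ids, holdout_ids = holdout_split(subjects, holdout_fraction=0.1)
```

The holdout subjects are never seen during training, so segmentations produced for them give a fairer picture of how the model behaves on new data.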

Learn more on the DevPost page. The image manipulation code is available on GitHub.